AWS Naming Standards: Introduction

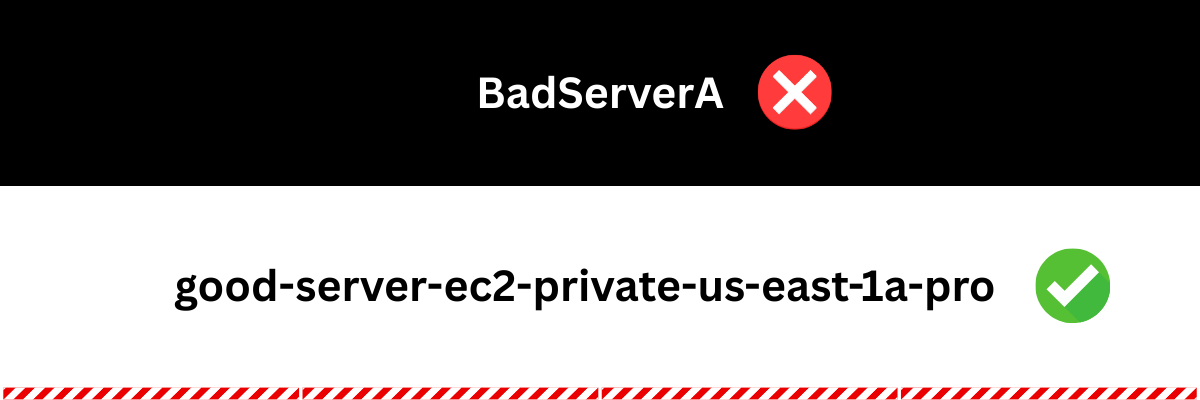

Why do we keep naming things badly?

Simple: there’s usually no easy system to follow. Half process, half convenience. If it’s painful to come up with names, people will just wing it.

Also, are you really going to open up an Excel sheet every time just to figure out the “right” format for something like this:

my-super-app-ec2-private-us-east-1-pro

The thought alone is exhausting, let alone doing it repeatedly.

However, it has to be done!

Because if not, you end up in the mess you have most likely experienced before.

I know what you’re thinking now, tagging! Tag them all!

Sure, that should absolutely be part of your compliance. But tagging is just labeling. It doesn’t replace naming your resources; it complements it and makes things more machine-friendly.

Though tagging may be essential for automation and CI/CD, let’s be real, when was the last time you actually clicked through pages just to read the tags?

So, a structured AWS Resource Naming Convention ensures that every S3 bucket, EC2 instance, VPC, or Lambda function can be programmatically identified, consistently tagged, and mapped back to its business or technical purpose.

For engineers, I am afraid this isn’t even optional: it’s a prerequisite for reliability, security, and cost management.

Below are the KEY naming standards you need to account for when designing a solid AWS Naming Scheme.

1. Uniqueness

In this section, I’ll show you why unique names matter, what you can do about it, and the key long-term benefits of getting it right.

Key AWS Naming Standard Numero Uno: Resource names must be unique within their provider-specific scope.

...and this is not only AWS; this is the law of the Clouds Universe:

- AWS: S3 bucket names must be globally unique (AWS S3 Bucket Naming Rules).

- GCP: Project IDs must be globally unique (GCP Managing Projects).

- Azure: Storage Accounts require DNS-unique names (Azure Resource Naming).

In most cases, resource names in AWS are just labels for humans — they don’t enforce uniqueness beyond an account/region. But here’s where things get messy: imagine you have multiple AWS accounts, no naming convention, and two resources with the exact same name, say: “My Very Nice Lambda”.

You can probably see where this is going:

- Cross-account invocation → Which function are you actually calling?

- EventBridge rules/triggers → Collisions create ambiguity.

- Monitoring & logging → Dashboards and alerts show duplicate names with no context.

- CI/CD pipelines → Risk of deploying or overwriting the wrong resource.

Bottom line: Duplicate names won’t break AWS, but they will break your operations.

Key Long-term benefits

1. Reduced Operational Risk

- Avoid accidental deployments, overwrites, or deletions.

- Prevent cross-account invocation confusion.

2. Clearer Monitoring and Logging

- Dashboards and logs immediately indicate which resource is which.

- Alerts and metrics are easier to interpret without ambiguity.

3. Easier Automation

- CI/CD pipelines, Terraform, CloudFormation, and Lambda triggers work reliably.

- Scripts and tools can reference resources programmatically without guesswork.

4. Improved Collaboration

- Teams can work across accounts, regions, and projects without constant explanations.

- New team members quickly understand resource purpose and scope.

5. Scalable Architecture

- As your cloud footprint grows, naming standards keep things organized.

- Supports multi-account, multi-region setups without collisions.

6. Faster Troubleshooting

- When something breaks, the resource name itself provides context.

- Reduces time spent searching for the right resource in large environments.

7. Better Compliance & Auditing

- Naming conventions help trace ownership, environment, and application.

- Makes audits and security reviews easier and less error-prone.

Best practice: Bake uniqueness into your naming schema. Use application codes, environment identifiers, region codes, and account prefixes so that every resource can be clearly tied back to its origin.

Example:

[acc_id]-taytly-app-lmb-private-us-east-1-pro

This is pretty damn unique to me!
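To make the collision problem tangible, here is a minimal Python sketch. It assumes you can export an inventory of (account id, resource name) pairs from somewhere; the account ids and the helper names `find_collisions` and `qualify` are made up for illustration. It flags names that exist in more than one account and shows that prefixing the account id removes the clash.

```python
from collections import defaultdict


def find_collisions(inventory):
    """Return {name: [account ids]} for names used in more than one account."""
    accounts_by_name = defaultdict(set)
    for account_id, name in inventory:
        accounts_by_name[name].add(account_id)
    return {
        name: sorted(accounts)
        for name, accounts in accounts_by_name.items()
        if len(accounts) > 1
    }


def qualify(account_id, name):
    """Prefix a name with its account id, as in the [acc_id]-... example."""
    return f"{account_id}-{name}"


# Hypothetical inventory of (account id, resource name) pairs.
inventory = [
    ("111111111111", "taytly-app-lmb-private-us-east-1-pro"),
    ("222222222222", "taytly-app-lmb-private-us-east-1-pro"),
    ("111111111111", "taytly-app-s3-private-us-east-1-pro"),
]

collisions = find_collisions(inventory)
qualified = [(account, qualify(account, name)) for account, name in inventory]

print(collisions)                  # the Lambda name shows up in two accounts
print(find_collisions(qualified))  # nothing left once the account id is part of the name
```

A check like this could run on a schedule against whatever inventory source you already have, so duplicates surface before they reach a dashboard or a pipeline.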

2. Length Compliance

Length in naming has been a problem since forever, not just for AWS resources. Think about all the classes, methods, and variables you’ve seen stretch on for miles, lighting up PMD like a Christmas tree. In this section, we’ll dive into the key practices that keep you on the safe side.

Providers enforce strict length constraints. Exceed them, and deployments fail. Your life gets miserable!

- AWS: EC2 tag values allow up to 256 characters (AWS EC2 User Guide).

- Azure: Storage Account names = 3–24 chars.

- GCP: Bucket names = 3–63 chars.

But it’s not just about staying within character limits: structured AWS resource names are meant to be human-readable.

If they aren’t, you might as well skip names entirely and rely only on tags, which quickly become a mess to manage.

It’s interesting that AWS allows some services to have extremely long names.

Honestly, I can’t see the point, and I hope there’s one. In practice, long names usually bring more trouble than benefit: harder to read, harder to type, and harder to automate.

Best practice: Keep names concise but descriptive, and don’t even think about maxing them out.

Short, meaningful names leave room for automation to append prefixes, suffixes, or identifiers without breaking your naming scheme.

This simple discipline makes querying, scripting, and cross-account operations far easier in the long run.

If possible, create a simple validation mechanism to flag names that exceed a certain length, say, 65 characters. This way, you at least have a team-aligned limit that keeps things readable and scalable, instead of letting names grow uncontrollably.
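Such a validation mechanism can be tiny. The sketch below is one possible shape in Python; the 65-character limit is the team-aligned example from above, not an AWS limit, and the function name `too_long` is hypothetical.

```python
MAX_NAME_LENGTH = 65  # team-aligned limit from the text, not a provider limit


def too_long(names, limit=MAX_NAME_LENGTH):
    """Return (name, length) pairs for every name longer than the limit."""
    return [(name, len(name)) for name in names if len(name) > limit]


candidates = [
    "crm-ec2-prod-us-east-1",
    "crm-" + "very-descriptive-" * 5 + "ec2-prod-us-east-1",
]

for name, length in too_long(candidates):
    print(f"{name!r} is {length} characters, limit is {MAX_NAME_LENGTH}")
```

Hooked into a pre-commit hook or a CI step, a check like this flags oversized names before anyone has to argue about them in review.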

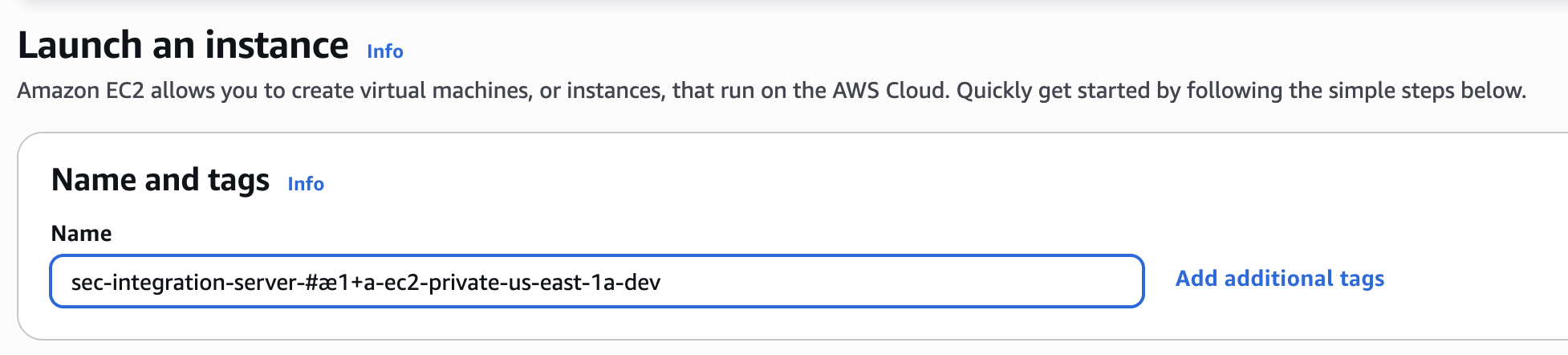

3. Character Compliance

Different services enforce different character rules, and breaking them can block provisioning. In this section, we’ll cover the basics of safe, compliant naming, avoiding special characters, uppercase letters, and spaces to keep names human-readable and error-free.

Naming rules differ per service, and violations block provisioning.

- AWS S3: lowercase letters, numbers, hyphens, and periods only.

- Azure: varies by service, but generally alphanumeric + dashes.

- GCP Project IDs: lowercase letters, digits, hyphens.

Even though some AWS services technically allow special characters in resource names, that doesn’t mean you should use them.

Remember: names are for humans to read and understand.

The safest and clearest approach is to stick to plain English, ASCII subset characters (A–Z, a–z) and digits (0–9).

Best practice: Keep it really simple. Avoid uppercase, special characters, and spaces, even if technically allowed. Stick to a reduced set: a-z, 0-9, -, _.
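The reduced character set is easy to check mechanically. Here is a minimal Python sketch, assuming exactly the set named above (a-z, 0-9, hyphen, underscore); the function names are hypothetical, and individual services may be stricter (S3 bucket names, for instance, do not accept underscores).

```python
import re

# Reduced character set from the best practice above: a-z, 0-9, "-", "_".
ALLOWED = re.compile(r"[a-z0-9_-]+")
NOT_ALLOWED = re.compile(r"[^a-z0-9_-]")


def is_compliant(name):
    """True if the name is non-empty and uses only the reduced character set."""
    return ALLOWED.fullmatch(name) is not None


def offending_characters(name):
    """Return the distinct characters that fall outside the reduced set."""
    return sorted(set(NOT_ALLOWED.findall(name)))


print(is_compliant("crm-ec2-prod-us-east-1"))
print(is_compliant("My Very Nice Lambda"))
print(offending_characters("My Very Nice Lambda"))
```

Reporting the offending characters, instead of a bare pass/fail, makes the error message actionable for whoever typed the name.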

4. Standardization

Structured and standardized names work only if everyone follows the same schema. In this section, I’ll show why consistent AWS naming conventions are so hard to stick to, how the complexity explodes across services and regions, and what you can do to make it manageable and enforceable across your teams.

Programmatic parsing requires a schema that everyone follows.

A common AWS Resource Naming Convention is:

{Application}{Resource}{Environment}{Region}

Example:

crm-ec2-prod-us-east-1

However,

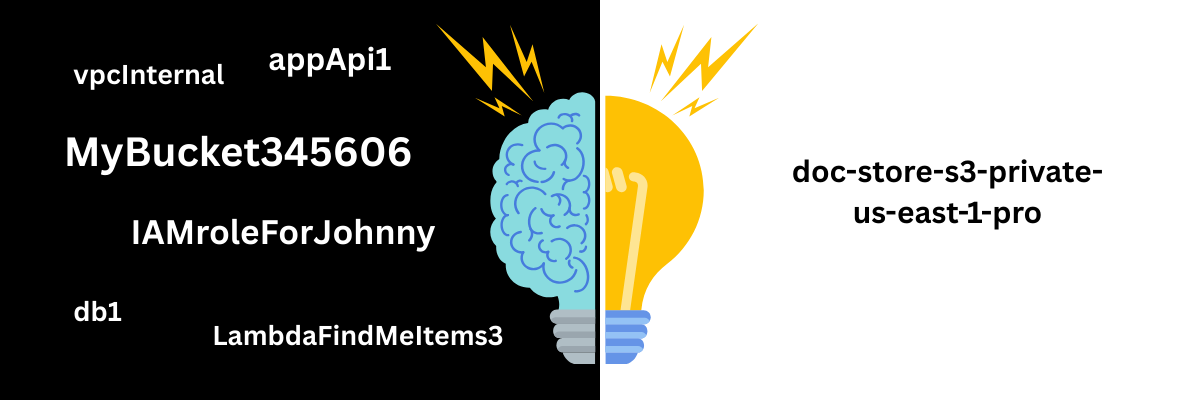

this has been one of the hardest things for teams to stay consistent with.

Everyone loves the idea at the start—sit down, align on a clean, detailed naming structure, agree on what to include, and enjoy a momentary bliss about how descriptive and smart it looks.

And then…

everyone fails to comply with it!

Why?

Because it’s impossible to keep up with. You can’t memorize it, and maintaining some massive spreadsheet of every possible combination and order is pure overhead.

So what happens? People quit.

But let me show you just how massive this problem really is.

Say you want that “perfect” detailed structure, so you include:

- Resource type (EC2, NAT, S3… about 800 AWS services and sub-services)

- Visibility (private, public, isolated, etc.)

- Region (around 35)

- Availability Zone (average of 3 per region)

- Environment (dev, sandbox, test, staging, UAT, prod, shared, training, hotfix, etc. — let’s say 9)

And that’s not even touching prefixes for business units or suffixes for special cases.

Do the math: 800 × 3 × 35 × 3 × 9 = 2,268,000 possible combinations.

Yeah, over two million, and the count climbs every time AWS ships another service.

Let that sink in.

No wonder AWS folks look half-cooked whenever naming standards come up.
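If you want to replay the math yourself, here is a small Python sketch using the rough counts from the list above (they are estimates from this article, not official AWS figures).

```python
from math import prod

# Rough counts taken from the list above; all of them are estimates.
dimensions = {
    "resource_types": 800,
    "visibility": 3,
    "regions": 35,
    "availability_zones": 3,
    "environments": 9,
}

total = prod(dimensions.values())
# Every additional resource type multiplies against all other dimensions.
per_new_resource_type = total // dimensions["resource_types"]

print(f"{total:,} combinations")                      # 2,268,000 combinations
print(f"{per_new_resource_type:,} more per new type")  # 2,835 more per new type
```

So with these numbers, every new service or sub-service adds another 2,835 possible names to remember.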

Anyways,

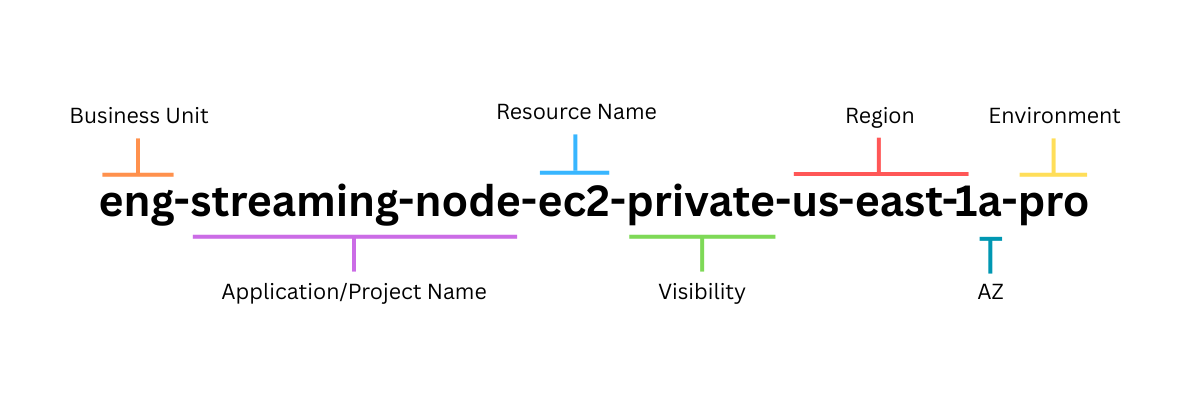

At Taytly we went with something like this:

{ApplicationCode}{ResourceCode}{VisibilityCode}{RegionCode}{AvailabilityZoneCode}{EnvironmentCode}

It gives us some more structure, but also the flexibility to switch components on or off when we need to.

Luckily, we don’t have to remember anything: Taytly provides the full structure in just a few clicks.

Best practice: Apply this pattern globally across accounts and teams. Enforce it through IaC modules, CI/CD checks, or an internal AWS Naming Tool.
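As an illustration of how such a schema could be enforced in code, here is a minimal Python sketch. It is not Taytly's implementation: the `ResourceName` class, its field names, and the hyphen separator are assumptions made for this example. Optional components are simply left out when they are not set, which is one way to get the "switch components on or off" behavior.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ResourceName:
    """Hypothetical builder following the component order of the schema above."""

    application: str
    resource: str
    environment: str
    visibility: Optional[str] = None
    region: Optional[str] = None
    availability_zone: Optional[str] = None

    def render(self, separator="-"):
        # Fixed order: application, resource, visibility, region, AZ, environment.
        parts = [
            self.application,
            self.resource,
            self.visibility,
            self.region,
            self.availability_zone,
            self.environment,
        ]
        # Components that are switched off (None or empty) are skipped.
        return separator.join(part for part in parts if part)


full = ResourceName(
    "my-super-app", "ec2", "pro", visibility="private", region="us-east-1"
)
minimal = ResourceName("crm", "s3", "dev")

print(full.render())     # my-super-app-ec2-private-us-east-1-pro
print(minimal.render())  # crm-s3-dev
```

Because the order lives in one place, nobody has to memorize it; a shared module or IaC helper built along these lines keeps every team on the same pattern.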

5. Operability

Good names aren’t just decoration, they’re fuel for automation. In this section, I’ll show how clear, consistent naming makes CLI queries, Terraform states, and CloudFormation diffs faster, safer, and far less painful, and what happens when names are sloppy.

Good names aren’t just “nice to have.” They directly impact how you work every single day.

CLI queries: With clear, consistent names, your AWS CLI commands are faster and easier to write.

You can target resources with a single filter instead of scrolling through endless JSON blobs.

Poor names force you into trial and error—or worse, manual clicks in the console.

Terraform states: Descriptive, predictable names make your state files clean and maintainable.

You can instantly spot which resource belongs where.

Bad names?

They blur environments, overlap services, and increase the risk of unintentional terraform destroy nuking the wrong thing.

CloudFormation diffs: When names are structured, you can tell at a glance what changed in a stack update.

If they’re sloppy, diffs turn into a wall of noise and you lose trust in the automation.

Bottom line: naming is automation fuel. Good names support efficient use of grep, jq, and AWS CLI filters. Bad names turn every query into detective work and every deploy into a gamble.

By the look on your face, I can tell that’s a hard no.
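To show what "a single filter" looks like in practice, here is a minimal Python sketch that selects resources purely by name pattern. The name list is hypothetical and stands in for whatever your CLI or SDK call returns; the same idea applies to grep, jq, or AWS CLI filters.

```python
from fnmatch import fnmatchcase


def select(names, pattern):
    """Return the names matching a shell-style wildcard pattern (case-sensitive)."""
    return [name for name in names if fnmatchcase(name, pattern)]


# Hypothetical resource names, as they might come back from a listing call.
names = [
    "crm-ec2-private-us-east-1-pro",
    "crm-lmb-private-us-east-1-pro",
    "crm-ec2-private-eu-west-1-dev",
    "My Very Nice Lambda",
]

prod_us_east = select(names, "crm-*-us-east-1-pro")
all_ec2 = select(names, "*-ec2-*")

print(prod_us_east)
print(all_ec2)
```

Note what happens to "My Very Nice Lambda": no pattern can tell you its environment or region, so it falls out of every query and ends up as manual detective work.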

6. Semantic Clarity

Names should carry meaning at a glance. In this section, I’ll show how to encode key metadata into resource names so engineers instantly know what they’re looking at, why it matters for troubleshooting, and how a consistent approach keeps teams aligned across accounts and services.

Names should encode metadata that engineers can read at a glance. For example:

app-api-lmb-private-us-east-1-pro

Tells you:

- Application: App API

- Resource: Lambda (lmb)

- Visibility: Private

- Region: us-east-1

- Environment: Production

This removes ambiguity and speeds up troubleshooting across shared accounts.
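The same breakdown can be done by a machine. Below is a minimal Python sketch that parses a name of this shape back into its components. The regular expression is an assumption for this example: it expects the five components shown above, a visibility of private, public, or isolated, resource codes without hyphens, and AWS-style region codes such as us-east-1.

```python
import re

# Assumed layout: application-resource-visibility-region-environment.
NAME_PATTERN = re.compile(
    r"^(?P<application>[a-z0-9-]+)"
    r"-(?P<resource>[a-z0-9]+)"
    r"-(?P<visibility>private|public|isolated)"
    r"-(?P<region>[a-z]{2}(?:-[a-z]+)+-\d)"
    r"-(?P<environment>[a-z]+)$"
)


def parse_name(name):
    """Return the name's components as a dict, or None if it does not fit the schema."""
    match = NAME_PATTERN.match(name)
    return match.groupdict() if match else None


parsed = parse_name("app-api-lmb-private-us-east-1-pro")
print(parsed)
print(parse_name("My Very Nice Lambda"))  # None: nothing to extract
```

The fixed visibility keyword is what lets the pattern split an application code that itself contains hyphens, which is one more argument for keeping component vocabularies small and closed.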

Now, though it is quite easy to recognize the regions, AZs, and environments, creating a semantically clean resource name is a story of its own. As I mentioned previously, if you need to make this scalable and applicable across all your teams, you need a mega spreadsheet with all AWS services and their abbreviations.

Here are a few suggestions:

- Lambda -> lmb

- Simple Storage Service (S3) -> s3

- Elastic Compute Cloud (EC2) -> ec2

That was easy, now some exciting ones:

- VPC Endpoint - Cassandra Streams -> vpce-cass

- Simple Notification Service (SNS) Topic FIFO -> snsfifo

You get where I’m going with this!
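If the mega spreadsheet has to exist, it can at least live in code where it can be checked. Here is a minimal Python sketch using only the abbreviations suggested above; the dictionary layout and the helper name `duplicate_codes` are assumptions for illustration.

```python
from collections import defaultdict

# Abbreviations taken from the suggestions above.
RESOURCE_CODES = {
    "Lambda": "lmb",
    "Simple Storage Service (S3)": "s3",
    "Elastic Compute Cloud (EC2)": "ec2",
    "VPC Endpoint - Cassandra Streams": "vpce-cass",
    "Simple Notification Service (SNS) Topic FIFO": "snsfifo",
}


def duplicate_codes(codes):
    """Return {code: [services]} for abbreviations claimed by more than one service."""
    services_by_code = defaultdict(list)
    for service, code in codes.items():
        services_by_code[code].append(service)
    return {
        code: sorted(services)
        for code, services in services_by_code.items()
        if len(services) > 1
    }


print(RESOURCE_CODES["Lambda"])         # lmb
print(duplicate_codes(RESOURCE_CODES))  # {} means every abbreviation is unambiguous
```

With hundreds of services, two teams inventing the same abbreviation for different things is a matter of time, so a duplicate check like this is worth running whenever the table changes.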

Your names have to be crystal clear and carry meaning everyone can understand.

When it comes to solid AWS Naming Standards, nothing beats a perfectly aligned team!

7. Provider Constraints

Finally, you made it to the end. But honestly, this is just the beginning. Many organizations today are multi-cloud, and your job as an engineer isn’t getting any easier. That’s why understanding provider-specific constraints is absolutely critical.

Keep provider-specific quirks in mind:

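Case sensitivity: Rules differ per service. IAM does not distinguish names by case when checking uniqueness (you can’t have both ADMINS and admins), while S3 bucket names must be lowercase and S3 object keys are case-sensitive.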

Immutability: Some names (e.g., S3 buckets, Azure Storage Accounts) cannot be changed after creation.

Scope: Some resources require global uniqueness; others only within account or region.

Best practice: Validate naming rules in automation pipelines before resource creation.

Consider a tool to help you create unique names based on compliant naming standards.
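A pre-creation check in a pipeline could look roughly like the Python sketch below. The rules are deliberately simplified versions of the published constraints (S3 buckets: 3 to 63 characters; Azure Storage Accounts: 3 to 24 lowercase letters and digits; GCP project IDs: 6 to 30 characters starting with a letter). The rule keys and the `validate` function are hypothetical, and the real provider rules contain more edge cases than these patterns cover.

```python
import re

# Simplified patterns; the providers' full rule sets have additional edge cases.
RULES = {
    # 3-63 chars, lowercase letters, digits, hyphens, periods; alphanumeric at both ends.
    "aws_s3_bucket": re.compile(r"[a-z0-9][a-z0-9.-]{1,61}[a-z0-9]"),
    # 3-24 chars, lowercase letters and digits only.
    "azure_storage_account": re.compile(r"[a-z0-9]{3,24}"),
    # 6-30 chars, starts with a letter, does not end with a hyphen.
    "gcp_project_id": re.compile(r"[a-z][a-z0-9-]{4,28}[a-z0-9]"),
}


def validate(kind, name):
    """True if the name satisfies the simplified rule for the given resource kind."""
    return RULES[kind].fullmatch(name) is not None


checks = [
    ("aws_s3_bucket", "crm-s3-private-us-east-1-pro"),
    ("aws_s3_bucket", "My_Bucket"),
    ("azure_storage_account", "crm-storage-prod"),
    ("gcp_project_id", "crm-prod-123456"),
]

for kind, name in checks:
    status = "ok" if validate(kind, name) else "REJECTED"
    print(f"{kind:24} {name:32} {status}")
```

Running this before `terraform apply` or a CloudFormation deploy turns a failed provisioning call into a fast, readable pipeline error.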

Conclusion

For AWS engineers and architects, a naming convention is infrastructure.

Defining and aligning on AWS Naming Standards is just as critical as VPC design, IAM strategy, or any other foundational decision.

Done right, it becomes the backbone of your cloud operations.

You get real, tangible benefits:

- Faster debugging: Resource names carry context, so you instantly know what you’re looking at, no more guessing across accounts or regions.

- Reliable automation pipelines: CI/CD, Terraform, CloudFormation, and scripts work predictably because names are consistent and programmatically parsable.

- Cleaner audit and compliance reports: Meaningful, structured names make ownership, environment, and purpose immediately clear.

- Sustainable scalability across teams: Multi-account, multi-region, and even multi-cloud setups stay organized without endless spreadsheets or tribal knowledge.

If you don’t already have one, define your AWS Resource Naming Convention now.

Use these AWS naming standards as your go-to guidance!

Document it, enforce it with IaC modules, CI/CD checks, or internal tooling, and make it a standard part of your DevOps workflow.

Remember: sloppy names won’t break AWS, but they will break your operations. Invest the time now, and your future self (and team) will thank you.


![7 Key AWS Naming Standards [Critical for Engineers and Architects]](/images/blog/cover-naming-standards.png)